
EU AI Act and Implication on Security Industry

Background

On March 13, 2024, the EU Parliament voted to adopt the AI Act. As we write this note, the Act is expected to be published in the Official Journal of the European Union. Its provisions will take effect in phases, category by category, starting six months from now and continuing over the next three years.

The Act is the first comprehensive legal framework for Artificial Intelligence on a global scale. Hailed as a "game changer" by some, it aims to balance the innovative potential of AI with ethical considerations and human safety. Like GDPR, the Act reaches beyond EU borders: it brings into its ambit every nation outside the EU that deals or has business relations with firms in the EU. It is very likely to serve as a model for many other countries, including a pivotal economy like India, where the majority of AI applications and systems will be outsourced and developed.

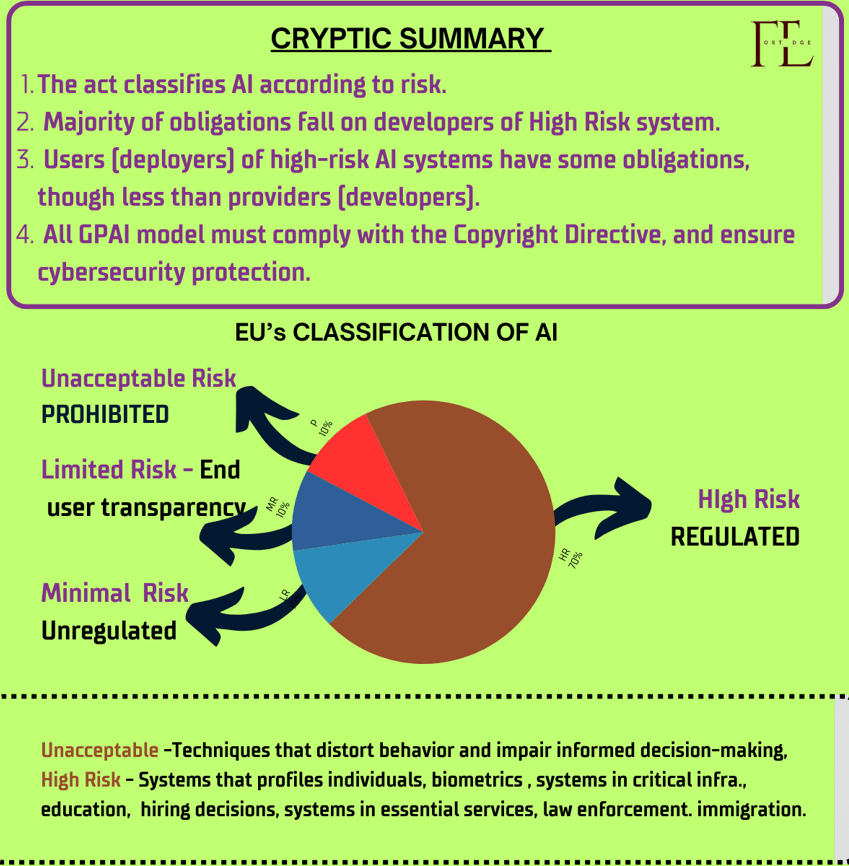

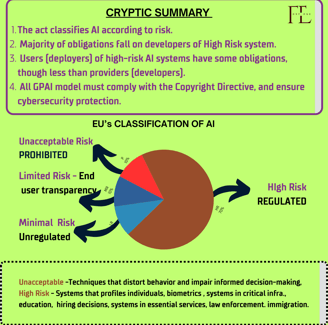

What the Act Says. The devil is in the detail of the Act, which runs to a hundred-odd pages. We can broadly summarize its intent under four heads.

1. The Act classifies AI systems and their implications into categories ranging from ‘prohibited’ to ‘unprohibited’.

2. The majority of obligations fall on providers (developers) of high-risk AI systems: those that intend to place high-risk AI systems on the market or put them into service in the EU, regardless of whether they are based in the EU or a third country.

3. The Act delineates ‘users’ from ‘end users’. Users are natural or legal persons that deploy an AI system in a professional capacity; they are not the affected end users. Users (deployers) of high-risk AI systems have some obligations, though fewer than providers (developers).

4. Obligations on general-purpose AI (GPAI) providers: all GPAI model providers must provide technical documentation and instructions for use, comply with the Copyright Directive, and publish a summary of the content used for training.

Categorization of AI. The Act classifies AI applications based on their risk level.

Unacceptable-risk systems will be prohibited. The key word here is subliminal: the Act bans deploying subliminal, manipulative, or deceptive techniques that distort behavior and impair informed decision-making, causing significant harm.

High-risk AI systems will be regulated. These are systems used as a safety component of, or as, a product covered by the EU laws in Annex II, and systems that fall under the Annex III use cases. The annexes run to several pages; for a broad understanding, these are systems that profile individuals, biometric systems, and systems used in critical infrastructure, education, hiring decisions, essential services, law enforcement, and immigration.

Limited-risk AI systems will be subject to lighter transparency obligations: developers and deployers must ensure that end users are aware that they are interacting with AI (chatbots and deepfakes).

Minimal risk will remain unregulated (including the majority of AI applications currently available on the EU single market, such as AI-enabled video games and spam filters).

This tiered approach aims to foster responsible AI development while minimizing unnecessary burdens on businesses. The Act focuses on the intended use of an AI system. The same technology might be acceptable for one purpose but prohibited for another. There is a clear emphasis on transparency and human oversight. While some concerns exist about potential stifling of innovation, the EU AI Act offers a clear framework for developers and businesses operating in the EU, fostering trust and promoting responsible AI development.
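For readers who think in code, the four tiers can be sketched as a simple lookup keyed on intended use. This is only an illustration built from the examples named in this article; the names `RiskTier`, `EXAMPLE_TIERS` and `classify` are our own assumptions, not anything defined by the Act, and real classification requires legal reading of the Act and its annexes.

```python
from enum import Enum


class RiskTier(Enum):
    """The four tiers as summarized in this article (labels are informal)."""
    UNACCEPTABLE = "prohibited"
    HIGH = "regulated"
    LIMITED = "lighter transparency obligations"
    MINIMAL = "unregulated"


# Hypothetical lookup using only the example uses mentioned in this article.
# It is illustrative, not a legal classification.
EXAMPLE_TIERS = {
    "subliminal manipulation": RiskTier.UNACCEPTABLE,
    "social scoring": RiskTier.UNACCEPTABLE,
    "biometrics": RiskTier.HIGH,
    "critical infrastructure": RiskTier.HIGH,
    "education": RiskTier.HIGH,
    "hiring decisions": RiskTier.HIGH,
    "essential services": RiskTier.HIGH,
    "law enforcement": RiskTier.HIGH,
    "immigration": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "deepfake": RiskTier.LIMITED,
    "video game": RiskTier.MINIMAL,
    "spam filter": RiskTier.MINIMAL,
}


def classify(intended_use: str) -> RiskTier:
    """Return the tier for a known example use; raise KeyError otherwise.

    The key is the intended use, not the underlying technology, because
    the same technology may land in different tiers for different purposes.
    """
    key = intended_use.strip().lower()
    if key not in EXAMPLE_TIERS:
        raise KeyError(f"No example tier recorded for: {intended_use!r}")
    return EXAMPLE_TIERS[key]
```

Calling `classify("hiring decisions")` returns `RiskTier.HIGH`, while an unlisted use raises an error rather than guessing, which mirrors the point that interpretation is needed wherever the Act gives no explicit example.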

Impact on Security Industry

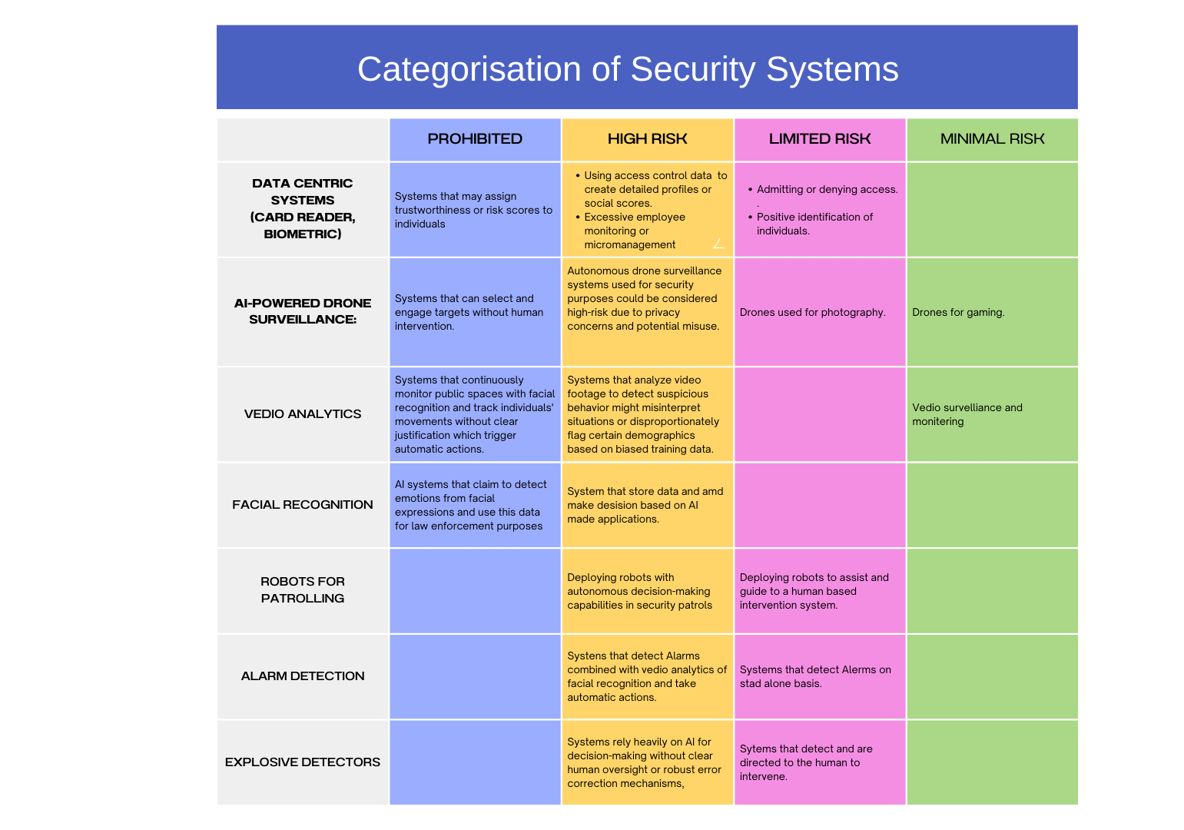

With obligations bearing on developers and users of ‘unacceptable-risk’ and ‘high-risk’ AI systems, it will be interesting to see which applications and actors from the security industry could fall into these two major categories. Specific details of the "prohibited" list have not been officially released yet, but a reasonable interpretation is possible. We have drawn up a table to list and compare them.

The chart is not exhaustive, and even the interpretation is subject to jurisdictional inference. The Act will take another 24 to 36 months to be implemented, and we are likely to see many more iterations of, and annexures to, the document. What it does at this stage is provide a clear framework for developers and businesses operating in the EU, fostering trust and promoting responsible AI development. The success of this legislation will likely be closely watched by other countries considering similar regulations for AI.

EU AI Act and its Implication on Security Industry

On 13 Mar 2024, the European Union adopted the AI Act, marking the advent of the first comprehensive legal framework for Artificial Intelligence on a global scale. Hailed as a "game changer" by some, it aims to balance the innovative potential of AI with ethical considerations and human safety.

The Act classifies AI applications based on their risk level. "Unacceptable risk" applications, like social scoring systems similar to those used in China, are banned. "High-risk" applications, such as AI-powered recruitment tools, face stricter regulations to ensure fairness and prevent discrimination. Lower-risk applications are subject to lighter requirements.

The EU AI Act will likely have a significant impact on the security industry operating within the European Union and beyond. The direct impact will be on transparency in how AI systems used for security make decisions and on scrutiny of potential bias in data analysis or decision-making, leading to stricter data governance and privacy practices. This article examines the nuances of the EU AI Act and its impact on the security industry in some detail.